Quickly Implement 'Alexa Built-In' IoT Designs Using a Microcontroller-Based Kit

Contributed By DigiKey's North American Editors

2020-02-11

Voice assistants have rapidly evolved to become an important feature in any smart product. Among current cloud-based solutions, Amazon’s Alexa Voice Service (AVS) has emerged as the dominant voice assistant, providing a turnkey capability that leverages Amazon cloud resources for voice recognition and natural language processing.

For developers, however, the performance requirements and design complexity of AVS have proven a significant barrier to entry for smaller, microcontroller-based devices for the connected home and the Internet of Things (IoT). Intended as both a drop-in solution and a reference design for custom applications, a kit from NXP Semiconductors provides an Amazon AVS implementation built specifically for resource-constrained devices.

This article shows how developers can rapidly implement "Alexa Built-in" designs using an out-of-the-box solution from NXP.

What is AVS?

Since its appearance a decade ago, voice assistant technology has evolved rapidly, driving a fast-growing smart speaker market that market analysts estimate already reaches about one-third of the US population. Among competing solutions, Amazon Echo smart speakers have gained a dominant share, leveraging the success of Amazon Web Services (AWS) in providing cloud-based resources that support third-party Echo apps, called Skills.

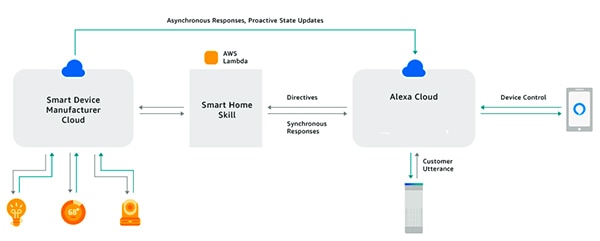

Using the Alexa Skills Kit (ASK) and its associated application programming interfaces (APIs), developers can take advantage of the rapidly expanding installed base of Echo smart speakers to add some level of voice control to their connected devices. With this approach, connected products such as smart televisions or thermostats that "work with Alexa" can respond to user voice requests and to the associated directives received from the Alexa cloud (Figure 1). Even this indirect approach offers a glimpse of the potential of voice control for connected products.

Figure 1: By building Alexa apps, or Skills, developers can enable connected products to interact with users' spoken commands through Amazon Echo products. (Image source: Amazon Web Services)

Alexa Built-in design

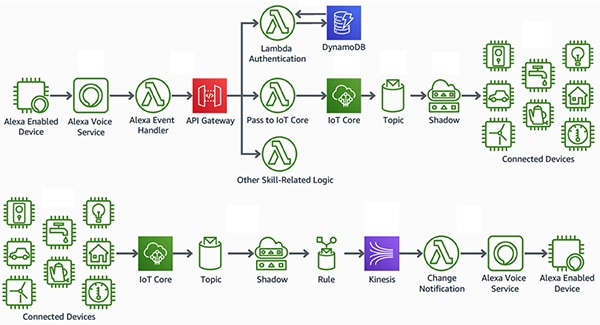

In contrast to products that "work with Alexa," smart products with "Alexa Built-in" integrate AVS directly into the connected device design, achieving a more seamless, low-latency interface between the Alexa voice-enabled device and AWS resources. Using AVS in combination with the AWS IoT Core platform, developers can implement sophisticated IoT applications in which users speak to Alexa-enabled products both to control connected devices and to receive voice responses from those devices (Figure 2).

Figure 2: Alexa-enabled devices provide users with a voice interface for controlling devices (top) or receiving notifications from IoT devices (bottom) connected to Amazon Web Services resources through AWS IoT Core. (Image source: Amazon Web Services)

In the past, however, the Alexa-enabled devices at the heart of this kind of IoT application have required major design efforts of their own. To use cloud-based Alexa services, a device needed to run the multiple service libraries of the AVS Device Software Development Kit (SDK) on an Android or Linux platform to detect the wake word, communicate with the Alexa cloud, and process directives for supported capabilities (Figure 3).

Figure 3: This diagram illustrates components of the AVS Device SDK and how data flows between them. (Image source: Amazon Web Services)

To support these service libraries, Alexa-enabled device designs typically needed a high-performance application processor and at least 50 megabytes (Mbytes) of memory. In addition, these designs often incorporated a digital signal processor (DSP) to execute the complex algorithms required to extract voice audio from noisy environments and to support the far-field voice capabilities expected of voice assistant devices. As a result, the system requirements for an effective Alexa-enabled device have typically gone well beyond the cost and complexity acceptable for practical IoT devices.

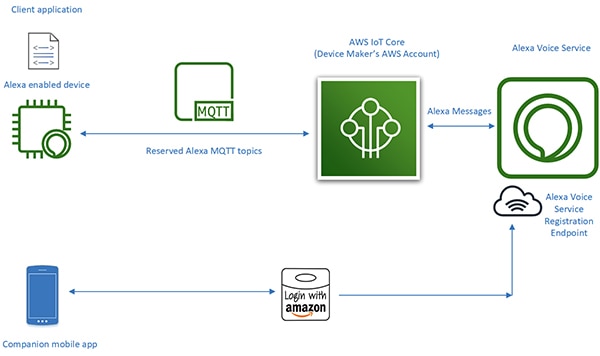

With the release of its AVS Integration for AWS IoT Core, however, Amazon dramatically slashed the processor workload and memory footprint required to implement Alexa Built-in products. With this service, compute- and memory-intensive tasks shift from the Alexa-enabled device to an associated virtual device in the cloud (Figure 4).

Figure 4: AVS for AWS IoT Core shifts memory- and processing-intensive tasks to the cloud to enable implementation of Alexa voice assistant capabilities on resource-constrained IoT devices. (Image source: Amazon Web Services)

The physical device's processing responsibilities are reduced to more basic services such as secure messaging, reliable delivery of audio data to and from Alexa, task management, and event notifications, both within the device and between the device and Alexa. Data, commands, and notifications pass between the physical device and Alexa as efficient MQTT (MQ Telemetry Transport) messages on a few reserved topics of the MQTT publish-subscribe protocol. Finally, a companion mobile app interacts with the Alexa cloud to handle device registration and any additional user interaction required with the Alexa-enabled device.
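Because all device-to-Alexa traffic runs over a few reserved MQTT topics, one straightforward approach in firmware is to derive every topic string from a common root. The minimal sketch below shows that idea under stated assumptions: the root string and the channel suffixes (event, directive, microphone, speaker) are hypothetical placeholders, not the actual topic names defined in Amazon's AVS for AWS IoT Core documentation, and build_topic is an illustrative helper rather than an SDK function.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical message channels. The real reserved topics are defined by
 * Amazon's AVS for AWS IoT Core documentation, not by this sketch. */
typedef enum { CH_EVENT, CH_DIRECTIVE, CH_MICROPHONE, CH_SPEAKER, CH_COUNT } channel_t;

/* Placeholder topic suffixes, one per channel. */
static const char *const kChannelSuffix[CH_COUNT] = {
    "event", "directive", "microphone", "speaker"
};

/* Writes "<root>/<suffix>" into out. Returns the topic length, or -1 if the
 * root is empty, the channel is invalid, or out is too small. */
int build_topic(char *out, size_t outLen, const char *root, channel_t ch)
{
    assert(out != NULL && root != NULL);
    if (strlen(root) == 0 || (unsigned)ch >= (unsigned)CH_COUNT) {
        return -1;
    }
    int n = snprintf(out, outLen, "%s/%s", root, kChannelSuffix[ch]);
    /* snprintf reports the length it wanted; a value >= outLen means truncation. */
    return (n < 0 || (size_t)n >= outLen) ? -1 : n;
}
```

For example, build_topic(buf, sizeof buf, "demo/root", CH_MICROPHONE) yields "demo/root/microphone", which the device's MQTT client would then use when publishing audio. Rejecting truncated topics, rather than publishing a shortened string, keeps a misconfigured buffer from silently sending messages to the wrong topic.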

In shifting heavy processing to the cloud, AVS for AWS IoT Core enables developers to create Alexa Built-in products with platforms that are more familiar to embedded systems developers. Rather than application processors with 50 Mbytes of memory running Linux or Android, developers can implement these designs with more modest microcontrollers that have less than 1 Mbyte of RAM and run real-time operating system (RTOS) software. In fact, Alexa-enabled designs built with AVS for AWS IoT Core can achieve a 50% reduction in the bill of materials compared with designs built to run the full suite of AVS services locally.

Although AVS for AWS IoT Core supports a more cost-effective runtime platform, implementation of a certified Alexa Built-in product remains a complex undertaking. Developers new to AVS and IoT Core can face a substantial learning curve in working through AWS requirements for security, communications, account management, user experience (UX) design, and much more. Regardless of their familiarity with the AWS ecosystem, all Alexa product developers must ensure that their designs meet a lengthy set of specifications and requirements for earning Amazon Alexa certification.

NXP's microcontroller-based Alexa solution is a turnkey system that fully implements the device-side hardware and software requirements of Amazon AVS for AWS IoT Core.

Microcontroller-based Alexa solution

Built around the NXP i.MX RT106A microcontroller, the NXP SLN-ALEXA-IOT AVS kit provides out-of-the-box AWS connectivity, AVS-qualified far-field audio algorithms, echo cancellation, Alexa wake word capability, and application code. Based on an Arm Cortex-M7 core, the kit's i.MX RT106A microcontroller is a member of NXP's i.MX RT106x family of crossover processors designed specifically for IoT edge computing. Built for embedded voice applications, the RT106A adds specialized functionality to the base architecture of the NXP i.MX RT1060 crossover processor family, which offers a comprehensive set of peripheral interfaces, extensive internal memory, and broad support for external memory options (Figure 5).

Figure 5: The NXP i.MX RT1060 crossover processor family integrates an Arm Cortex-M7 microcontroller core with a full set of peripheral interfaces, memory, and other capabilities typically required in an IoT device. (Image source: NXP)

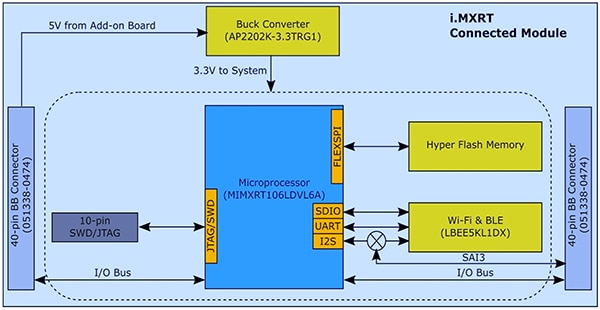

With its integrated functionality, the i.MX RT106A microcontroller needs only a few additional components to provide the hardware foundation required for implementing AVS for AWS IoT Core. In its SLN-ALEXA-IOT kit, NXP integrates the i.MX RT106A microcontroller in a system module with 256 megabits (Mb) of flash memory, Murata Electronics’ LBEE5KL1DX Wi-Fi/Bluetooth transceiver module, and Diodes’ AP2202K-3.3TRG1 buck converter (Figure 6).

Figure 6: The design of the NXP SLN-ALEXA-IOT AVS kit system module takes advantage of the simple hardware interface required for integrating the NXP i.MX RT106A microcontroller with external flash and a wireless transceiver. (Image source: NXP)

Complementing this system module, a voice board in the SLN-ALEXA-IOT kit provides three Knowles SPH0641LM4H-1 pulse-density modulation (PDM) MEMS microphones, a PUI Audio AS01808AO speaker, and an NXP TFA9894D class-D audio amplifier. Along with a USB Type-C connector for powering the kit and for running a shell console from a personal computer, the voice board provides headers for Ethernet, serial peripherals, and the i.MX RT106A microcontroller's general purpose input/output (GPIO). Finally, the board includes switches for basic control input, as well as LEDs that use different colors and on/off cycling patterns to provide visual feedback compliant with the Amazon AVS UX Attention System requirements.
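With the speaker on the same board as the microphones, the kit inevitably picks up its own playback, which is why echo cancellation appears among its audio features. NXP does not describe its algorithm here, so the sketch below shows a textbook normalized least-mean-squares (NLMS) adaptive filter, one common way to estimate the speaker-to-microphone echo path from the signal sent to the speaker and subtract the estimated echo. The filter length, step size, and the nlms_aec_t, aec_init, and aec_process names are illustrative assumptions, not part of NXP's software.

```c
#include <assert.h>
#include <stddef.h>

#define AEC_TAPS 8 /* illustrative length; real echo paths need far more taps */

typedef struct {
    float w[AEC_TAPS]; /* adaptive estimate of the speaker-to-mic echo path */
    float x[AEC_TAPS]; /* recent speaker (reference) samples, x[0] is newest */
    float mu;          /* NLMS step size, 0 < mu < 2 */
} nlms_aec_t;

void aec_init(nlms_aec_t *s, float mu)
{
    assert(s != NULL && mu > 0.0f && mu < 2.0f);
    for (size_t i = 0; i < AEC_TAPS; i++) {
        s->w[i] = 0.0f;
        s->x[i] = 0.0f;
    }
    s->mu = mu;
}

/* Processes one sample pair: ref is the sample sent to the speaker, mic is
 * the microphone sample. Returns the echo-cancelled residual. */
float aec_process(nlms_aec_t *s, float ref, float mic)
{
    /* Shift the reference history and insert the newest sample. */
    for (size_t i = AEC_TAPS - 1; i > 0; i--) {
        s->x[i] = s->x[i - 1];
    }
    s->x[0] = ref;

    float echoEstimate = 0.0f;
    float energy = 1e-6f; /* small bias avoids division by zero */
    for (size_t i = 0; i < AEC_TAPS; i++) {
        echoEstimate += s->w[i] * s->x[i];
        energy += s->x[i] * s->x[i];
    }

    float e = mic - echoEstimate;     /* residual after removing estimated echo */
    float gain = s->mu * e / energy;  /* update normalized by reference energy */
    for (size_t i = 0; i < AEC_TAPS; i++) {
        s->w[i] += gain * s->x[i];
    }
    return e;
}
```

As the weights converge toward the actual echo path, the speaker's contribution drops out of the residual and near-end speech remains. Production cancellers typically use much longer, often frequency-domain, filters plus double-talk detection so that the user's own speech does not disturb the adaptation.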

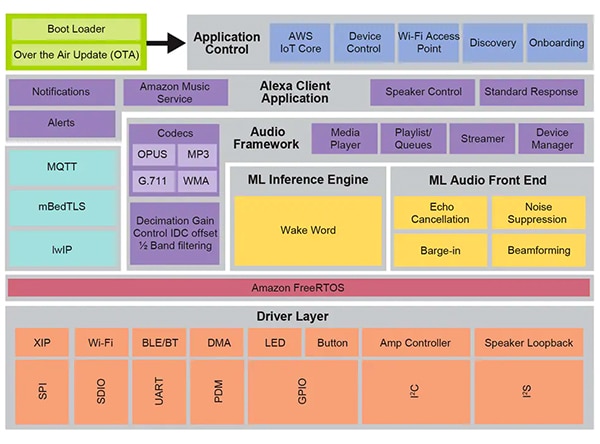

With its system module and voice board, the SLN-ALEXA-IOT hardware provides a complete platform for device-side processing of AVS for AWS IoT Core software. As noted earlier, however, Alexa-enabled IoT device designs depend as much on optimized software as on hardware. Creating that software from scratch with Amazon's AVS for AWS IoT API can significantly delay projects as developers work through building the required data objects and implementing the associated protocols. Further delays can arise as developers working toward Alexa Built-in certification try to comply with the AVS UX Attention System, AWS security practices, and other requirements that touch every aspect of users' interactions with Alexa services. NXP addresses these concerns with a comprehensive runtime voice-control software environment built on Amazon FreeRTOS and on a layer of software drivers for execute-in-place (XIP) flash, connectivity, and other hardware components (Figure 7).

Figure 7: Built on Amazon FreeRTOS, the NXP voice control system environment provides an extensive set of middleware services including firmware routines for machine-learning inference and audio front-end processing. (Image source: NXP)

Underlying this software environment's voice processing capabilities, NXP's Intelligent Toolbox firmware provides optimized functions for all audio tasks, including the machine-learning (ML) inference engine and the ML audio front-end for audio signal conditioning and optimization. Other middleware services support secure connectivity, AWS communications, and audio capabilities. Above this service layer sits software for AWS IoT Core, onboarding, and other application control features, which is launched by a two-stage bootloader that supports over-the-air (OTA) updates built on the AWS IoT OTA service and the Amazon FreeRTOS OTA client.
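A bootloader that supports OTA updates commonly follows a simple pattern: keep two application image slots and boot the newest image that passes validation, so an interrupted or corrupted download falls back to the previous image. The sketch below illustrates that pattern with a hypothetical image header (magic value, version, checksum). It is not NXP's actual bootloader format, and its additive checksum merely stands in for the cryptographic signature check a production bootloader would perform.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define IMG_MAGIC 0x494D4721u /* hypothetical marker for a programmed slot */

/* Hypothetical image header; not NXP's actual layout. */
typedef struct {
    uint32_t magic;
    uint32_t version;
    uint32_t checksum;      /* stand-in for real signature verification */
    const uint8_t *payload;
    size_t length;
} image_hdr_t;

/* Additive checksum over the image payload. */
uint32_t image_checksum(const uint8_t *p, size_t n)
{
    uint32_t sum = 0;
    for (size_t i = 0; i < n; i++) {
        sum += p[i];
    }
    return sum;
}

static int image_is_valid(const image_hdr_t *img)
{
    return img->magic == IMG_MAGIC && img->payload != NULL &&
           image_checksum(img->payload, img->length) == img->checksum;
}

/* Returns the slot (0 or 1) to boot: the newest valid image, or the only
 * valid one. Returns -1 if neither slot holds a valid image. */
int select_boot_slot(const image_hdr_t slots[2])
{
    assert(slots != NULL);
    int valid0 = image_is_valid(&slots[0]);
    int valid1 = image_is_valid(&slots[1]);
    if (valid0 && valid1) {
        return (slots[1].version > slots[0].version) ? 1 : 0;
    }
    if (valid0) {
        return 0;
    }
    if (valid1) {
        return 1;
    }
    return -1;
}
```

In this scheme, an OTA client writes the new image into the slot that is not currently running, so a failed download can never damage the image the device is executing.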

Using the factory-installed software running on this environment, developers can immediately bring up the SLN-ALEXA-IOT hardware kit with a complete Alexa-enabled application designed to use an NXP demonstration account for AWS IoT. NXP documentation provides a detailed walkthrough of bringing up the kit, provisioning Wi-Fi credentials, and completing AWS device authentication with the demonstration account. During this process, developers interact with the kit and AWS through an Android mobile app included in the software distribution package, which is available from the NXP site using the activation code provided with each SLN-ALEXA-IOT kit. After a few straightforward steps, developers can begin interacting with the kit through the same sort of Alexa voice interactions provided by Echo smart speakers.

For rapid prototyping of products with Alexa capabilities, the SLN-ALEXA-IOT kit and its factory-installed software provide a ready platform. At the same time, the kit hardware and software serve as a rapid development platform for creating custom Alexa-enabled designs based on the i.MX RT106A microcontroller.

Custom development

Software for i.MX RT106A-based Alexa solutions leverages the capabilities of the NXP voice control runtime environment through the NXP MCU Alexa Voice IoT SDK provided as part of the software distribution package available on product activation. Designed as an add-on to NXP's Eclipse-based MCUXpresso integrated development environment (IDE), this SDK combines full source code for sample applications, drivers, and middleware with headers for binary distributions of specialized firmware capabilities such as the NXP Intelligent Toolbox, ML inference engine, and ML audio front-end.

Developers who need to quickly deploy an Alexa-enabled product can in principle use the full Alexa demonstration application with minor modifications. In the simplest case, these modifications would simply retarget the application to the developer's own AWS account using their own security credentials. NXP provides a step-by-step description for completing this process.

For custom development, the sample applications included in the software distribution provide executable examples showing how to work with the NXP MCU Alexa Voice IoT SDK. Rather than jumping directly into the full Alexa demonstration application, developers can explore sample applications that let them more tightly focus on specific capabilities including the audio front-end, Wi-Fi and Bluetooth connectivity, bootloading, and others. For example, the audio front-end sample application illustrates the basic design patterns for performing wake word detection using Amazon FreeRTOS tasks.

In the audio front-end sample application, the main routine demonstrates how developers initialize hardware and software subsystems and then use the FreeRTOS xTaskCreate function to launch the main application task (appTask) and a console shell task (sln_shell_task) before handing control to the FreeRTOS scheduler (Listing 1). (Note: the vTaskStartScheduler call returns only if there is insufficient FreeRTOS heap memory to create the idle task, or the timer service task if timers are enabled, when the scheduler starts.)

    void main(void)
    {
        /* Enable additional fault handlers */
        SCB->SHCSR |= (SCB_SHCSR_BUSFAULTENA_Msk | /*SCB_SHCSR_USGFAULTENA_Msk |*/ SCB_SHCSR_MEMFAULTENA_Msk);

        /* Init board hardware. */
        BOARD_ConfigMPU();
        BOARD_InitBootPins();
        BOARD_BootClockRUN();
        ...
        RGB_LED_Init();
        RGB_LED_SetColor(LED_COLOR_GREEN);

        sln_shell_init();

        xTaskCreate(appTask, "APP_Task", 512, NULL, configMAX_PRIORITIES - 1, &appTaskHandle);
        xTaskCreate(sln_shell_task, "Shell_Task", 1024, NULL, tskIDLE_PRIORITY + 1, NULL);

        /* Run RTOS */
        vTaskStartScheduler();
        ...
    }

Listing 1: Included in the NXP MCU Alexa Voice IoT SDK distribution, a sample audio front-end application demonstrates basic initialization requirements and creation of FreeRTOS tasks for the main application task and a console shell task. (Code source: NXP)
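The stack sizes passed to xTaskCreate are easy to misread. FreeRTOS specifies the stack depth (the third argument) in stack words of type StackType_t rather than in bytes, and on 32-bit Arm Cortex-M ports, including the Cortex-M7, that type is 32 bits wide. The host-side sketch below, which assumes a 4-byte stack word, converts the depths used in the audio front-end sample (Listing 1 and the appTask code that follows) into bytes; stack_word_t, task_stack_t, and the helper functions are illustrative stand-ins, not FreeRTOS definitions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumption: stands in for FreeRTOS StackType_t on a 32-bit Cortex-M port. */
typedef uint32_t stack_word_t;

typedef struct {
    const char *name;  /* task name passed to xTaskCreate() */
    size_t depthWords; /* stack depth argument, in stack words */
} task_stack_t;

/* Stack depths used by the tasks of the audio front-end sample. */
static const task_stack_t kTasks[] = {
    {"APP_Task", 512},
    {"Shell_Task", 1024},
    {"Audio_processing_task", 1536},
    {"pdm_to_pcm_task", 1024},
};

/* Converts a stack depth in words to bytes. */
size_t stack_bytes(size_t depthWords)
{
    assert(depthWords <= SIZE_MAX / sizeof(stack_word_t)); /* guard overflow */
    return depthWords * sizeof(stack_word_t);
}

/* Sums the stack memory that the four tasks allocate. */
size_t total_stack_bytes(void)
{
    size_t total = 0;
    for (size_t i = 0; i < sizeof kTasks / sizeof kTasks[0]; i++) {
        total += stack_bytes(kTasks[i].depthWords);
    }
    return total;
}
```

Under this assumption, appTask's 512-word stack occupies 2 Kbytes, and the four task stacks together occupy 16 Kbytes. Each dynamically created task also consumes heap for its task control block, which this arithmetic leaves out.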

After initializing the audio subsystem, the main application task appTask in turn launches a pair of FreeRTOS tasks. One task runs a service routine, audio_processing_task, that processes audio input, while the other handles conversion of microphone PDM output to pulse-code modulation (PCM). After additional housekeeping, appTask settles into an endless loop, waiting for an RTOS notification signifying that the wake word was detected (Listing 2).

    void appTask(void *arg)
    {
        ...
        // Create audio processing task
        if (xTaskCreate(audio_processing_task, "Audio_processing_task", 1536U, NULL, audio_processing_task_PRIORITY,
                        &xAudioProcessingTaskHandle) != pdPASS)
            ...
        // Create pdm to pcm task
        if (xTaskCreate(pdm_to_pcm_task, "pdm_to_pcm_task", 1024U, NULL, pdm_to_pcm_task_PRIORITY, &xPdmToPcmTaskHandle) !=
            pdPASS)
            ...
        RGB_LED_SetColor(LED_COLOR_OFF);
        SLN_AMP_WriteDefault();

        uint32_t taskNotification = 0;
        while (1)
        {
            xTaskNotifyWait(0xffffffffU, 0xffffffffU, &taskNotification, portMAX_DELAY);

            switch (taskNotification)
            {
                case kWakeWordDetected:
                {
                    RGB_LED_SetColor(LED_COLOR_BLUE);
                    vTaskDelay(100);
                    RGB_LED_SetColor(LED_COLOR_OFF);
                    break;
                }
                default:
                    break;
            }

            taskNotification = 0;
        }
    }

Listing 2: In the sample audio front-end application, the main application task, appTask, launches tasks to handle audio processing and microphone data conversion and then waits for a FreeRTOS notification (taskNotification) that the wake word was detected (kWakeWordDetected). (Code source: NXP)
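The pdm_to_pcm_task launched by appTask turns each microphone's 1-bit PDM stream into multi-bit PCM samples. NXP does not show that code, so the sketch below illustrates the principle with the simplest decimation filter: a single-stage boxcar (equivalent to a first-order CIC filter) that counts the ones in each block of PDM bits and rescales the count to a signed 16-bit sample. The decimation factor of 64 and the pdm_to_pcm name are assumptions; practical converters cascade higher-order CIC and FIR stages for far better noise rejection.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PDM_DECIMATION 64 /* assumed PDM bits per PCM sample (multiple of 8) */

/* Converts packed PDM bits into PCM samples. Each output sample is the
 * density of ones in one block of PDM_DECIMATION bits, mapped onto the
 * signed 16-bit range. Returns the number of samples written. */
size_t pdm_to_pcm(const uint8_t *pdm, size_t pdmBytes, int16_t *pcm, size_t pcmMax)
{
    assert(pdm != NULL && pcm != NULL);
    const size_t bytesPerSample = PDM_DECIMATION / 8;
    size_t n = 0;

    for (size_t off = 0; off + bytesPerSample <= pdmBytes && n < pcmMax; off += bytesPerSample) {
        int ones = 0;
        for (size_t b = 0; b < bytesPerSample; b++) {
            /* Count set bits by repeatedly clearing the lowest one. */
            for (uint8_t v = pdm[off + b]; v != 0; v &= (uint8_t)(v - 1)) {
                ones++;
            }
        }
        /* Half density (ones == 32) means zero; map [-64, 64] to [-32768, 32768]. */
        int32_t sample = (int32_t)(2 * ones - PDM_DECIMATION) * (32768 / PDM_DECIMATION);
        if (sample > INT16_MAX) {
            sample = INT16_MAX; /* all-ones block would overflow by one */
        }
        pcm[n++] = (int16_t)sample;
    }
    return n;
}
```

An alternating 1010... bit pattern has a density of one half and therefore decodes to silence (0), while all-ones and all-zeros blocks decode to full-scale positive and negative samples.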

In turn, the audio processing task in this sample application initializes the firmware wake word engine and sets its detection parameters before entering its own endless loop, where it waits for a FreeRTOS notification from the microphone data conversion task that processed microphone data is available. At that point, the audio processing task calls Intelligent Toolbox firmware functions that process the audio data and perform wake word detection using the ML inference engine (Listing 3).

    void audio_processing_task(void *pvParameters)
    {
        ...
        SLN_AMAZON_WAKE_Initialize();
        SLN_AMAZON_WAKE_SetWakeupDetectedParams(&wakeWordActive, &wwLen);

        while (1)
        {
            // Suspend waiting to be activated when receiving PDM mic data after Decimation
            xTaskNotifyWait(0U, ULONG_MAX, &taskNotification, portMAX_DELAY);
            ...
            // Process microphone streams
            int16_t *pcmIn = (int16_t *)((*s_micInputStream)[pingPongIdx]);
            SLN_Voice_Process_Audio(g_externallyAllocatedMem, pcmIn,
                                    &s_ampInputStream[pingPongAmpIdx * PCM_SINGLE_CH_SMPL_COUNT], &cleanAudioBuff, NULL,
                                    NULL);

            // Pass output of AFE to wake word
            SLN_AMAZON_WAKE_ProcessWakeWord(cleanAudioBuff, 320);
            taskNotification &= ~currentEvent;

            if (wakeWordActive)
            {
                wakeWordActive = 0U;
                // Notify App Task Wake Word Detected
                xTaskNotify(s_appTask, kWakeWordDetected, eSetBits);
            }
        }
    }

Listing 3: In the sample audio front-end application, the audio processing task initializes the firmware wake word engine, waits for a FreeRTOS notification that microphone data is available, and then calls the NXP Intelligent Toolbox firmware audio front-end (SLN_Voice_Process_Audio) and ML inference engine (SLN_AMAZON_WAKE_ProcessWakeWord) for wake word detection. (Code source: NXP)
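The audio processing task selects its input through pingPongIdx, which suggests ping-pong (double) buffering: the conversion side fills one block while the processing side works on the other, and the two swap each time a block completes. The single-threaded sketch below models only that indexing. The ping_pong_t type, its functions, and the 4-sample block length are illustrative assumptions, and the FreeRTOS notification that would announce a completed block in firmware is reduced to a return value.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define BLOCK_SAMPLES 4 /* illustrative block length */

/* Two blocks: the producer fills one while the consumer reads the other. */
typedef struct {
    int16_t block[2][BLOCK_SAMPLES];
    unsigned writeIdx; /* block currently being filled (0 or 1) */
    size_t fill;       /* samples written into block[writeIdx] so far */
} ping_pong_t;

void pp_init(ping_pong_t *pp)
{
    pp->writeIdx = 0;
    pp->fill = 0;
}

/* Producer side: appends one sample. When a block fills up, the producer
 * switches to the other block and the index of the completed block is
 * returned (where firmware would notify the consumer); otherwise -1. */
int pp_write(ping_pong_t *pp, int16_t sample)
{
    assert(pp != NULL && pp->fill < BLOCK_SAMPLES);
    pp->block[pp->writeIdx][pp->fill++] = sample;
    if (pp->fill == BLOCK_SAMPLES) {
        int done = (int)pp->writeIdx;
        pp->writeIdx ^= 1u; /* start filling the other block */
        pp->fill = 0;
        return done;
    }
    return -1;
}

/* Consumer side: read-only access to a completed block. */
const int16_t *pp_block(const ping_pong_t *pp, int idx)
{
    assert(idx == 0 || idx == 1);
    return pp->block[idx];
}
```

The scheme holds up only while the consumer finishes each block before the producer wraps back around to it; otherwise samples in that block are overwritten before they are read.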

After wake word detection, the audio processing task issues a FreeRTOS task notification to inform the main application task, appTask, of that event. On receipt of that notification, appTask briefly flashes the LED blue (see Listing 2 again).

The complete Alexa sample application relies on the same patterns described for the simpler audio front-end application but substantially extends that basic code base to support full Alexa functionality. For example, after the ML inference engine detects the wake word in the Alexa sample application, the audio processing task performs a series of FreeRTOS notifications associated with each stage in the Alexa processing sequence (Listing 4).

    void audio_processing_task(void *pvParameters)
    {
        ...
        SLN_AMAZON_WAKE_Initialize();
        SLN_AMAZON_WAKE_SetWakeupDetectedParams(&u8WakeWordActive, &wwLen);

        while (1)
        {
            // Suspend waiting to be activated when receiving PDM mic data after Decimation
            xTaskNotifyWait(0U, ULONG_MAX, &taskNotification, portMAX_DELAY);
            ...
            int16_t *pcmIn = (int16_t *)((*s_micInputStream)[pingPongIdx]);
            SLN_Voice_Process_Audio(g_w8ExternallyAllocatedMem, pcmIn,
                                    &s_ampInputStream[pingPongAmpIdx * PCM_SINGLE_CH_SMPL_COUNT], &pu8CleanAudioBuff, NULL,
                                    NULL);
            SLN_AMAZON_WAKE_ProcessWakeWord((int16_t*)pu8CleanAudioBuff, 320);
            taskNotification &= ~currentEvent;

            // If devices is muted, then skip over state machine
            if (s_micMuteMode)
            {
                if (u8WakeWordActive)
                {
                    u8WakeWordActive = 0U;
                }
                memset(pu8CleanAudioBuff, 0x00, AUDIO_QUEUE_ITEM_LEN_BYTES);
            }

            if (u8WakeWordActive)
            {
                configPRINTF(("Wake word detected locally\r\n"));
            }

            // Execute intended state
            switch (s_audioProcessingState)
            {
                case kIdle:
                    /* add clean buff to cloud wake word ring buffer */
                    continuous_utterance_samples_add(pu8CleanAudioBuff, PCM_SINGLE_CH_SMPL_COUNT * PCM_SAMPLE_SIZE_BYTES);
                    if (u8WakeWordActive)
                    {
                        continuous_utterance_buffer_set(&cloud_buffer, &cloud_buffer_len, wwLen);
                        u8WakeWordActive = 0U;
                        wwLen = 0;
                        // Notify App Task Wake Word Detected
                        xTaskNotify(s_appTask, kWakeWordDetected, eSetBits);
                        // App Task will now determine if we begin recording/publishing data
                    }
                    break;
                ...
                case kWakeWordDetected:
                    audio_processing_reset_mic_capture_buffers();
                    // Notify App_Task to indicate recording
                    xTaskNotify(s_appTask, kMicRecording, eSetBits);
                    if (s_audioProcessingState != kMicRecording)
                    {
                        s_audioProcessingState = kMicCloudWakeVerifier;
                    }
                    configPRINTF(("[audio processing] Mic Recording Start.\r\n"));
                    // Roll into next state
                case kMicCloudWakeVerifier:
                case kMicRecording:
                    micRecordingLen = AUDIO_QUEUE_ITEM_LEN_BYTES;
                    if (u8WakeWordActive)
                    {
                        u8WakeWordActive = 0U;
                    }
                    // Push data into buffer for consumption by AIS task
                    status = audio_processing_push_mic_data(&pu8CleanAudioBuff, &micRecordingLen);
                    ...
            }
        }

Listing 4: In the full Alexa application, the audio processing task augments the processing steps performed in the audio front-end application with additional code for managing the subsequent audio processing stages in the Alexa sequence. (Code source: NXP)

After local ML wake word detection, the full Alexa application's audio processing task notifies the main application task as described before. In addition, it now must manage audio processing states where the microphone remains open to capture the complete audio input for speech processing in the Alexa cloud without losing the original data stream containing the locally detected wake word. This complete data stream is passed to the Alexa cloud for wake word verification as well as further speech processing.
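Keeping the audio that precedes a local detection can be sketched with a ring buffer. In Listing 4, the idle state keeps appending cleaned audio to a ring buffer (continuous_utterance_samples_add) and, on a local detection, calls continuous_utterance_buffer_set with the wake word length. The minimal sketch below shows the underlying idea under assumed names and sizes (preroll_t and its functions are hypothetical, not NXP's implementation): a circular buffer that always holds the most recent samples, from which the latest span can be copied out oldest-first so the stream sent to the cloud begins before the wake word.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PREROLL_CAPACITY 8 /* illustrative; a real buffer would hold seconds of audio */

typedef struct {
    int16_t data[PREROLL_CAPACITY];
    size_t head;  /* next write position */
    size_t count; /* valid samples, up to PREROLL_CAPACITY */
} preroll_t;

void preroll_init(preroll_t *r)
{
    r->head = 0;
    r->count = 0;
}

/* Appends samples, overwriting the oldest ones once the buffer is full. */
void preroll_add(preroll_t *r, const int16_t *s, size_t n)
{
    assert(r != NULL && s != NULL);
    for (size_t i = 0; i < n; i++) {
        r->data[r->head] = s[i];
        r->head = (r->head + 1) % PREROLL_CAPACITY;
        if (r->count < PREROLL_CAPACITY) {
            r->count++;
        }
    }
}

/* Copies the most recent len samples into out, oldest first. Returns the
 * number copied, clamped to the number of samples the buffer holds. */
size_t preroll_latest(const preroll_t *r, size_t len, int16_t *out)
{
    assert(r != NULL && out != NULL);
    if (len > r->count) {
        len = r->count;
    }
    size_t start = (r->head + PREROLL_CAPACITY - len) % PREROLL_CAPACITY;
    for (size_t i = 0; i < len; i++) {
        out[i] = r->data[(start + i) % PREROLL_CAPACITY];
    }
    return len;
}
```

For example, after samples 1 through 10 pass through this 8-sample buffer, requesting the latest 4 samples returns 7, 8, 9, 10 in chronological order, even though the newest values have wrapped around to the start of the array.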

At each stage in this processing sequence, the audio processing task issues corresponding FreeRTOS notifications to the main application task. As with the audio processing task, the full Alexa application extends the main application task pattern presented in simpler form in the audio front-end application. Here, the main application task, appTask, generates events for transmission to the Alexa cloud and also manages the kit's LEDs in compliance with Amazon AVS UX Attention System requirements. For example, when the microphone stays open after wake word detection, the audio processing task sends the main application task a kMicRecording notification (Listing 4), and appTask responds by setting the appropriate UX attention state, uxListeningActive, shown as a solid cyan LED indicator (Listing 5).

    void appTask(void *arg)
    {
        ...
        // Create audio processing task
        if (xTaskCreate(audio_processing_task, "Audio_proc_task", 1536U, NULL, audio_processing_task_PRIORITY, &xAudioProcessingTaskHandle) != pdPASS)
            ...
        // Create pdm to pcm task
        if (xTaskCreate(pdm_to_pcm_task, "pdm_to_pcm_task", 512U, NULL, pdm_to_pcm_task_PRIORITY, &xPdmToPcmTaskHandle) != pdPASS)
            ...
        while(1)
        {
            xTaskNotifyWait( ULONG_MAX, ULONG_MAX, &taskNotification, portMAX_DELAY );

            if (kIdle & taskNotification)
            {
                // Set UX attention state
                ux_attention_set_state(uxIdle);
                taskNotification &= ~kIdle;
            }

            if (kWakeWordDetected & taskNotification)
            {
                if (reconnection_task_get_state() == kStartState)
                {
                    if (!AIS_CheckState(&aisHandle, AIS_TASK_STATE_MICROPHONE))
                    {
                        // Set UX attention state
                        ux_attention_set_state(uxListeningStart);
                        ...
                        // Begin sending speech
                        micOpen.wwStart = aisHandle.micStream.audio.audioData.offset + 16000;
                        micOpen.wwEnd = aisHandle.micStream.audio.audioData.offset + audio_processing_get_wake_word_end();
                        micOpen.initiator = AIS_INITIATOR_WAKEWORD;
                        AIS_EventMicrophoneOpened(&aisHandle, &micOpen);

                        // We are now recording
                        audio_processing_set_state(kWakeWordDetected);
                    }
                }
                else
                {
                    ux_attention_set_state(uxDisconnected);
                    audio_processing_set_state(kReconnect);
                }
                taskNotification &= ~kWakeWordDetected;
            }
            ...
            if (kMicRecording & taskNotification)
            {
                // Set UX attention state and check if mute is active
                // so we don't confuse the user
                if (audio_processing_get_mic_mute())
                {
                    ux_attention_set_state(uxMicOntoOff);
                }
                else
                {
                    ux_attention_set_state(uxListeningActive);
                }
                taskNotification &= ~kMicRecording;
            }
            ...

Listing 5: In the full Alexa application, the main application task orchestrates the Alexa processing sequence including controlling LED lights in compliance with Alexa certification requirements. (Code source: NXP)

The main routine in the full Alexa application similarly extends the pattern demonstrated in simpler form in the audio front-end application. In this case, the main application also creates additional FreeRTOS tasks for a more substantial initialization procedure as well as tasks to handle the kit's buttons and support OTA updates.

By building on these sample applications, developers can confidently implement Alexa Built-in functionality using the NXP MCU Alexa Voice IoT SDK in their own i.MX RT106A-based designs. Because it takes full advantage of AVS for AWS IoT Core, this execution platform enables developers to deploy Alexa-enabled solutions more broadly in the low-cost, resource-constrained devices at the foundation of increasingly sophisticated IoT applications.

Conclusion

Amazon Alexa Voice Service has enabled developers to implement the same voice assistant functionality that is driving the rapid growth of Echo smart speakers. In the past, products able to earn the coveted Alexa Built-in certification required execution platforms with substantial local memory and high-performance processing capabilities. Built with AVS for AWS IoT Core, a kit from NXP provides a drop-in solution for Alexa Built-in functionality using a microcontroller and an associated software execution environment.

Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.